Mosca: Overview

An overview of the Mosca ambisonic software. For listening with headphones.

A Telefonista

A Telefonista is an autobiographical, process-based performance and sound installation by the performer Simone Reis and the sound and installation artist Iain Mott. On stage, Reis enters into dialogue with multiple words, sound sources and poetics of real and fictional information, emphasising a state of liminality: tensions between reality and fiction. The character is bombarded with autobiographical information that is supposedly false and/or true (from the media, from the author's life, from the telephone conversations that the character/author hears and creates inside and outside her head, and from the social and antisocial networks installed in her porous brain during sleep). Is the telephonist an invisible yet audible being? A visible, sonic, physical presence? How can an absent body be made visible/audible?

The artwork will incorporate a modified antique telephone switchboard as an object of encounter and of sonic and physical propagation for the performer. This curious object, with its extensions, switches and handset, will function as an interface between the performer and the acoustic information that comes in and goes out. The switchboard is an extension of the telephonist/author and a form of intelligence in itself. It facilitates listening in on calls, connections between people and texts, verbal interventions by the operator and her own surprising electroacoustic expressions.

Is the telephonist-author alive or dead? Will her machine, full of extensions, explode from the excess of autobiographical technological junk that orbits her small planet full of poetic, pathetic and sonic verbal connections?

This unfinished work rejects the consolation of pure fiction and points to a dimension of the real, revealing singular gestures of "author-representation" and confronting art and life.

We are immensely grateful for the technical collaboration of Jim Sosnin and the support of the Fundo de Apoio à Cultura, FAC-DF.

New version of Mosca coming soon

A new version of the Mosca software will be released soon, incorporating work by Thibaud Keller. The release will bring new ambisonic libraries as well as VBAP support, OSC support and integration with OSSIA-score, an improved GUI, support for higher-order ambisonic signals, a selection of RIRs (room impulse responses) for each source, and various other improvements. For details on the upcoming release, see the article by Iain Mott and Thibaud Keller: Three-dimensional sound design with Mosca. See also https://escuta.org/mosca.

Mosca Video Tutorial

Tutorial on using the GUI of the Mosca quark for SuperCollider. Please listen with headphones and view in full-screen mode.

Summoned Voices

Summoned Voices acts as a living memory of people and place. It consists of a series of door installations each with an intercom, sound system and a computer that is networked to a central file and database server. The design metaphor of the door presents a familiar scenario, that of announcing oneself at a doorway and waiting for a response from persons unknown. Signage instructs the public to speak, make sounds or sing into the intercom. Their voice is stored and interpreted, and results in local playback composed of the individual's voice with those that have gone before. Summoned Voices acts as an interpreter of sound, a message board and an imprint of a community - a place for expression, reflection and surprise.

Summoned Voices was premiered at the Art In Output Festival in Eindhoven, Netherlands in February 2003 and is a collaborative project by Iain Mott and Marc Raszewski. It was initiated during Iain Mott's artist residency at the CSIRO Mathematical and Information Sciences in 1999/2000 working with the Digital Media Information Systems (DMIS) research group in Sydney and Canberra. The project was assisted by the New Media Arts Fund of the Australia Council, the Federal Government's arts funding and advisory body. The Studium Generale of the Technische Universiteit Eindhoven assisted the final realisation at Art In Output.

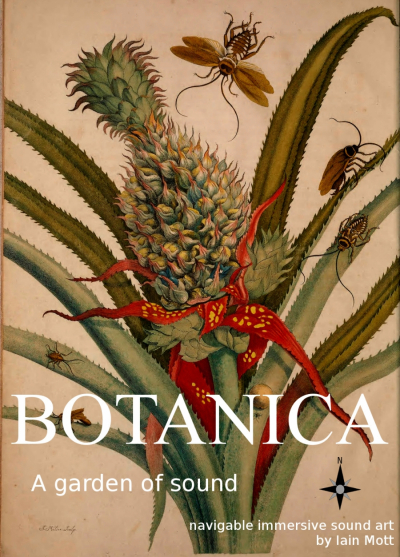

Botânica

Botânica is a sound art work for gardens. Members of the public, accompanied by a guide, wear headphones and carry a technologised shoulder bag to explore an immersive sound composition overlaid on the landscape. The sounds heard are largely crafted from nature and include audio recordings from the cerrado of Brazil's Centre-West. As participants walk through the garden, their movements are tracked by GPS, allowing them to hear a variety of sounds mapped to different locations. The soundscapes encountered along the way are three-dimensional, and a head-tracking device on the headphones enables an interactive immersion that responds to the listener's finest movements. Sounds may be fixed in space, acting as beacons, or may follow their own trajectories through the garden. The headphones are of an open type, allowing the natural sounds of the environment to mix with those of the overlay.

Botânica is presented as a sonic fantasy. It is inspired by the landscape, the biosphere and the elements, and is returned to the environment to be explored in a specific setting, the garden, itself another fantasy of and for nature. It is a sound composition organised in space rather than time and, as such, interacts directly with the environment and with human presence.

Since the early 1990s, the sound artist Iain Mott (UK / AU) has been involved in the spatial mapping of sound in various contexts. His 1998 work Sound Mapping, made with the engineer Jim Sosnin and the designer Marc Raszewski, is particularly relevant to the current project. Among the first artworks to use GPS, Sound Mapping was selected for exhibition at the prestigious Prix Ars Electronica of the same year in Linz, Austria. Based in Brazil for a decade, he is a regular collaborator with the actress Simone Reis and is currently an adjunct professor in the area of voice and sound design in the Departamento de Artes Cênicas of the Universidade de Brasília. Botânica is an outcome of his research in the field of ambisonics (a form of surround sound first developed in the United Kingdom in the 1970s) and specifically of the project Cerrado Ambisônico, which involves the use of ambisonic field recordings of the cerrado in theatrical contexts. His open source software, Mosca (an ambisonic extension class for the SuperCollider music language), was created during this research and will form the technical basis of Botânica.

Project supported by MCTI/CNPq under Edital Universal Nº 14/2013.

Ambisonic Map

An ambisonic map with high-quality B-format field recordings available for download (48kHz, 24bit, wav format). Each recording is accompanied by UHJ stereo and binaural mixes for listening directly online. The map was produced as part of the project Cerrado Ambisônico.

Bruna Martini: Winner of the Best Actress award, FESTU-RIO, for the play "Stanisloves-me" - Directed by Simone Reis

Posted by Simone Reis

Bruna Martini: Winner of the Best Actress award, Mostra Competitiva Nacional, FESTU-Rio, for the play "Stanisloves-me". Simone Reis: Nominated for Best Direction.

"Stanisloves-me"

Performance: Bruna Martini

Direction: Simone Reis

Concept and Dramaturgy: Simone Reis and Bruna Martini

Texts: Hilda Hilst, Bruna Martini and Simone Reis

B-Format to Binaural & UHJ Stereo

Use the three scripts contained in the zip file below in "Download attachments" to batch convert a directory of B-format audio to binaural and UHJ stereo. Requires that SuperCollider is installed with the standard plugins and that the "Ctk" quark is enabled. The main shell script also encodes mp3 versions of the binaural and UHJ files and if this feature is used (not commented out), the system will require that "lame" is installed. The SuperCollider script uses the ATK and is taken directly from the SynthDef and NRT examples for ATK.

Unzip the scripts into a directory, edit the paths in the file renderbinauralUHJ.sh to match your installation and Linux distribution, make this file executable and run it in the directory containing the B-format files to perform the conversions.

Creating Impulse Responses with Aliki

The following procedure shows how to make B-format impulse responses (IRs) with the Linux software Aliki by Fons Adriaensen. A detailed user manual is available for Aliki; the guide presented here on escuta.org, however, is intended to show how to produce IRs without the need to run the software in the field, which enables the use of portable audio recorders such as the Tascam DR-680. The procedure was arrived at through email correspondence with Fons. His utility "bform2ald" is included here with permission.

Field Equipment used:

- Zoom H4n - for sine sweep playback

- Core Sound Tetramic, stand and cabling

- Tascam DR-680 multi-channel recorder

- Yorkville YSM1p powered audio monitor with cabling to play Zoom H4n output

- 12V sealed lead acid battery and recharging unit

- Power inverter to suit monitor

1. Launch Aliki in the directory in which you wish to create and store your "session" files and sub-directories, select the "Sweep" window and create a sweep file with these or other values:

- rate: 48000

- fade in: 0.1

- start freq: 20

- Sweep time: 10

- End freq: 20e3

- Fade out: 0.03

2. Select "Load" to load the sweep into Aliki and perform an export as a 24bit wav file or file type of your choosing.

3. Import the "*-F.wav" export into Ardour or another sound editor and insert an 800Hz blip or other audio marker 5 seconds before the start of the sweep. Insert some silence before the blip, as some players (the Zoom H4n for example) may miss the initial milliseconds of a file on playback. Export the file as a 24bit 48kHz stereo file, since the Zoom doesn't accept mono files.

4. Import file into Zoom H4n recorder for playback.

5. In the field, connect the line out of the Zoom H4n to the Yorkville YSM1p and play the file, recording with the Tetramic and Tascam DR-680. In my first test I recorded with the meter reading at around -16dB. I could have given the amp more gain, but the speaker casing was beginning to buzz with the low frequencies.

6. The Tascam creates 4 mono files. Use a script to combine them into an A-format file, then use Tetrafile to convert that file to B-format with the mic's calibration data (with the "def" setting).

7. Install and use the utility bform2ald (see "Download attachments" below) to convert the B-format capture to Aliki's native "ald" file format.

8. Load the "ald" sweep capture into Aliki. Enter into edit mode and right-click to place a marker at the beginning of the blip. Use the logarithmic display to make the positioning easier. Once positioned, left-click "Time ref" to zero the location of the blip, then slide the marker to the 5 second mark and again left-click "Time ref" to zero the location of the start of the capture.

9. Right-click a second time a little to the right of the blue start marker. This will create a second olive coloured marker, marking the point at which a raised cosine fade-in starting at the blue marker will reach unity gain. When positioned, left-click "Trim start". Zoom out and drag the two markers to the end of the capture in order to perform a fade out in the same way with "Trim end". Use the log view to aid with this process.

10. Save this trimmed capture in the edited directory with "Save section".

11. Select "Cancel" and then "Load" to reload the freshly trimmed capture in the edited directory, then select "Convol". In this window, select the original sweep file used to create the capture in the "Sweep" dialogue. Enter "0" in the "Start time" field and in the "End time" field enter a number in seconds that represents the expected reverberation time plus two or three more seconds. Finally, select apply to perform the deconvolution, then perform a "Save section" to save the complete IR in the "impresp" directory.

12. Select "Cancel" and "Load" to load the recently created impulse in the "impresp" directory, then enter edit mode. The impulse may not be visible so use the zoom tools and in Log view, identify the first peak in the IR which should appear shortly after 0 seconds. This peak should represent the direct sound. While we may decide not to keep this peak, we will use it now to normalise the IR so that a 0 dB post fader aux send to the convolver will reproduce the correct ratio of direct sound to reverberation when using "tail IRs" or IRs without the direct impulse (see 13 below). To normalise, right-click to position the blue marker on the peak then left-click "Time-ref" to zero the very start of the direct impulse and shift-click "Gain / Norm".

13. The complete IR created above in step 12, containing the impulse of the direct signal as well as those of the first reflections and of the diffuse tail, may be convolved with an anechoic source to position that source in the sound field. If used in this way, the "dry" signal of the source should not be mixed with the "wet" or convolved signal, and there will be no control over the degree of reverberation. If however the first 10msec of the IR are silenced (using the blue and olive markers and "Trim start" in Aliki to fade in from silence just before 10msec, for example), the anechoic signal may be positioned in the sound field by including the dry signal in the mix (panned by ambisonic means to a position corresponding to that of the original source in the IR) and varying the gain on the "wet" or convolved signal to adjust the level of reverberation and reinforce the apparent position of the virtual source through the first reflections encoded in the IR. Another alternative is to silence the first 120msec of the IR to create a so-called "tail IR". This removes the first-reflections information entirely from the IR and enables the sound to be moved freely by ambisonic panning. The level of reverberation is adjustable; however, there will be no first-reflections information to aid the listener's localisation of the virtual source or to contribute to the illusion of its "naturalness". A fourth possibility is to use a tail IR in conjunction with various IRs for different locations. These IRs, encoding first reflections only (those occurring between 10 and 120msec), could be chosen for example to match the positions of specific musicians on a stage. The engineer will first pan the dry signal of a source to a particular position, then mix in the wet signal derived from convolution with the first-reflections IR for the corresponding location, and additionally send a feed from the dry signal to a global tail IR common to all sources.
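As a rough aid when placing markers for the time boundaries mentioned in this procedure, the following sketch converts them to sample counts at the 48000 rate used for the sweep in step 1. The function name ms_to_samples is hypothetical and is not part of Aliki. It assumes the capture was recorded at the same rate as the sweep.

```shell
#!/bin/bash
# Hypothetical helper (not part of Aliki): convert a duration in
# milliseconds to a sample count at a given sample rate.
# Uses integer arithmetic, which is exact for the values below.
ms_to_samples() {
    local rate=$1 ms=$2
    echo $(( rate * ms / 1000 ))
}

rate=48000   # assumed: same rate as the sweep created in step 1

echo "blip to sweep start (5 s):       $(ms_to_samples "$rate" 5000) samples"
echo "direct sound window (10 ms):     $(ms_to_samples "$rate" 10) samples"
echo "end of first reflections (120 ms): $(ms_to_samples "$rate" 120) samples"
```

At 48000 samples per second this gives 240000, 480 and 5760 samples respectively. For a different sample rate, change the rate variable.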

Producing B-format Files with Core Sound Tetramic & Tascam DR-680 on Linux

The script and other configurations detailed on this page convert mono files generated by a Tascam DR-680 with a Core Sound Tetramic soundfield microphone to B-format 4-channel wav files. It requires that the Tascam DR-680 is configured to save recordings as mono sound files on channels 1, 2, 3 & 4 and that these channel numbers match the corresponding capsules on the Tetramic. The script also requires that Tetramic calibration files are installed (see below) and the additional installation of the following programs by Fons Adriaensen:

jconvolver-0.9.2.tar.bz2 (includes the necessary utility "makemulti")

Fons Adriaensen provides a free calibration service for Tetramics which generates calibration files specific to each microphone, based on data provided with the microphone on purchase. See "TetraProc / TetraCal" and "Calibration service for Core Sound's TetraMic" on this page for further information.

Run the script in a directory containing the mono files. Change paths and configuration filenames in the script as necessary. Use the command line argument --elf to enable extended low frequency response in the B-format output (-3dB at 40Hz), or no argument to use the default roll-off at 80Hz.

The B-format script is contained in the attachment "mono2bformat.zip" below. Alternatively, copy the following code:

    #!/bin/bash

    # Converts dated mono files generated by a Tascam DR-680 with a Core Sound
    # Tetramic ambisonic microphone to B-format 4-channel wav files. Run this
    # script in the directory containing the mono files. Change paths as
    # necessary. Use the command line argument --elf to enable extended low
    # frequency response in the B-format output (-3dB at 40Hz), or no argument
    # to use the default roll-off at 80Hz.

    if [ "$1" = "--elf" ]; then
        config="elf"
    else
        config="def"
    fi

    [ -d aformat ] || mkdir aformat
    [ -d bformat ] || mkdir bformat

    for file in *.wav
    do
        base=${file:0:11}            # first 11 characters: name shared by the 4 mono files
        channelnumber=${file:15:1}   # character at offset 15: channel number (1 to 4)

        # Channels 1 to 3: build up the makemulti argument list.
        if [ "$channelnumber" = "1" ]; then
            command="/usr/local/bin/makemulti --wav --24bit $file"
        fi
        if [ "$channelnumber" = "2" ]; then
            command="$command $file"
        fi
        if [ "$channelnumber" = "3" ]; then
            command="$command $file"
        fi

        # Channel 4 completes the set: write the A-format file, then convert it.
        if [ "$channelnumber" = "4" ]; then
            command="$command $file $base"
            suffix="a-format.wav"
            command=$command$suffix
            $command
            aformatfile=$base$suffix
            mv ./$aformatfile ./aformat

            if [ "$config" = "elf" ]; then
                suffix="b-format_elf.wav"
            else
                suffix="b-format.wav"
            fi
            bformatfile=$base$suffix

            # Convert A-format to B-format with the mic's calibration file.
            if [ "$config" = "elf" ]; then
                /usr/local/bin/tetrafile --fuma --wav --hpf 20 /home/iain/.tetraproc/CS2293-elf.tetra aformat/$aformatfile bformat/$bformatfile
            else
                /usr/local/bin/tetrafile --fuma --wav --hpf 20 /home/iain/.tetraproc/CS2293-def.tetra aformat/$aformatfile bformat/$bformatfile
            fi
        fi
    done

    # Remove the intermediate A-format files.
    rm -r aformat

    exit 0
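The script depends on fixed character offsets in each filename, so it may help to see what its two substring expansions extract. The sketch below uses a made-up filename built only to fit those offsets. It is an assumption and not the documented Tascam DR-680 naming scheme, so check it against the files your recorder actually produces.

```shell
#!/bin/bash
# Hypothetical filename, constructed only to match the offsets used in the
# script above; real DR-680 filenames may be laid out differently.
file="120501_0007_ch_1.wav"

base=${file:0:11}            # 11 characters starting at offset 0
channelnumber=${file:15:1}   # 1 character at offset 15

echo "base=$base channel=$channelnumber"
# prints: base=120501_0007 channel=1
```

If your filenames have a different layout, the offsets in the two expansions need adjusting so that base is identical for the four files of one recording and channelnumber is the digit 1 to 4.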

Mosca: Audio demonstration

Posted by Iain Mott

A binaural demonstration of the Mosca SuperCollider class using the voice of Simone Reis reciting from the drama Gota d'Água, B-format recordings of a Chinook helicopter and of Spitfires by John Leonard, B-format recordings of insects and frogs from Brasilia and Chapada dos Veadeiros, a galloping horse (spatialised mono source with Doppler effect and Chowning-style reverberation) and some Schubert (stereo). Binaural decoding performed with the CIPIC HRTF database's subject ID# 21, included in the ATK.

Please listen with headphones and ensure that they are correctly orientated.

Mosca: SuperCollider class for dynamic ambisonic sound fields

Mosca is an extension class for the SuperCollider language for three-dimensional sound design and augmented reality. The class facilitates the encoding of ambisonic signals up to 5th order and offers several methods for decoding acoustic scenes to loudspeaker arrays or headphones.

The sound sources to be spatialised can be of various types. For example, they may be audio files or signals in mono, stereo or ambisonic format. The positions of the sources in the acoustic scene, their movements and all the interface controls can be recorded, allowing several layers of control over the sources. In addition to the native mechanisms for recording the state of the scene, there is an interface with the multimedia sequencer OSSIA Score for recording all aspects of the GUI and for synchronising the acoustic scene with other media (e.g. video, DMX lighting) via OSC messages. Such recordings allow Mosca to be used without a GUI in embedded applications.

Mosca responds to the orientation and location of the listener in the scene through the GUI and through sensors such as headtrackers (orientation sensors) and locative technologies, for example GNSS/GPS via NMEA-standard messages. To give scenes a visual reference, Mosca facilitates the import of maps and graphics and the creation of textual annotations and drawings on these images.

Because of its integration with the SuperCollider language, the acoustic scene can be manipulated by code to facilitate complex and precise movements. In addition, synthesis processes within SuperCollider can be incorporated as a source and receive all the GUI data to modulate the sound. Reverberation is an important effect in simulating space and the distance of a source, and Mosca provides two mechanisms. One is a local reverberation, configured per source, which is spatialised together with the source and becomes more prominent with distance. The other is a global reverberation that comes into play with nearby sources and has a more immersive effect. In addition to two standard reverberators, reverberation or other effects can be applied by convolution with ambisonic impulse responses supplied by the user.

Resources:

- The Mosca source code is available for download: https://github.com/escuta/mosca

- Video about Mosca

- The article Three-dimensional sound design with Mosca by Iain Mott and Thibaud Keller

- Installing Mosca on Windows

Escuta.org

Escuta.org brings together sound art and performance projects by Simone Reis and Iain Mott, together with other collaborating artists and technicians, including Marc Raszewski, Jim Sosnin, Nelson Maravalhas, and students of the Departamento de Artes Cênicas of the Universidade de Brasília (UnB), among others.

To view individual projects, see "projects" in the menu above. The projects are divided into five groups: 1) sound and scenic installation by Mott, Reis and others 2) performance by Simone Reis 3) sound art and musical composition by Iain Mott 4) research by Iain Mott 5) pedagogical projects by Reis and Mott at the Universidade de Brasília.