Projects (21)

A voice means this: there is a living person,

throat, chest, feelings, who sends into the air this voice,

different from all other voices.

A voice involves the throat, saliva, infancy, the patina of experienced life,

the mind’s intentions, the pleasure of giving a personal form to sound waves.

— Italo Calvino

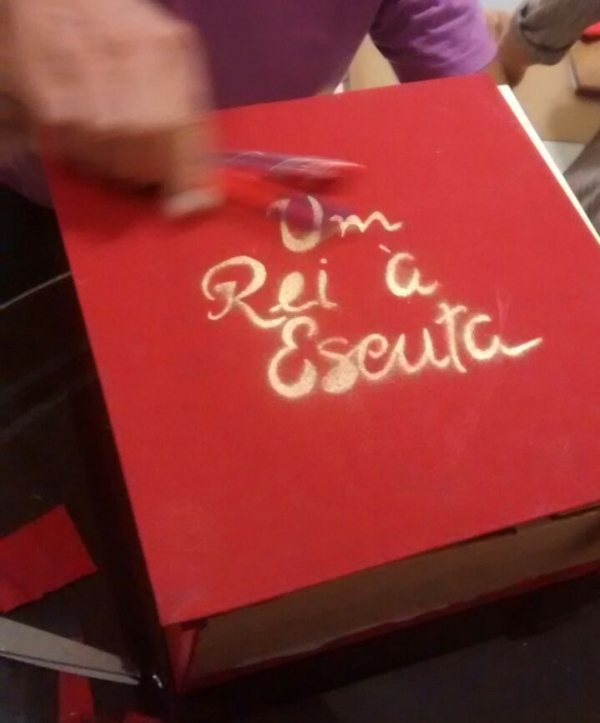

Acoustic theatre for headphones of Italo Calvino's story A King Listens (Um Rei à Escuta in Portuguese) by the Laboratório de Performance e Teatro do Vazio (LPTV), research and extension group of the Instituto de Artes (IdA) of the Universidade de Brasília (UnB), linked to the Decanato de Extensão (DEX) and to the Departamento de Artes Cênicas, UnB.

Actors/voices:

Déborah Soares

Felipe Fernandes

Rogério Luiz

Direction, audio production and sound design:

Iain Mott

Binaural processing and mixing performed with the Soundscape Renderer (SSR), Puredata, Ardour 3, Jconvolver and the Jack Audio Connection Kit.

References

CALVINO, Italo. Um Rei à Escuta. In: Nilson Moulin (Trad.); Sob o Sol-Jaguar [Sotto il sole giaguaro, 1986]. p.57–89. São Paulo: Companhia das Letras, 1995.

* See also the more complete version in Portuguese:

Spatial-acoustic research on the cerrado from the perspective of the performing arts

Professor responsible: Dr. Iain David Mott

Departamento de Artes Cênicas (CEN)

Universidade de Brasília (UnB)

Project approved by the MCTI/CNPq in the Edital Universal Nº 14/2013.

Objectives

To study the acoustics and soundscape of the landforms and vegetation types of the Chapada dos Veadeiros national park and produce virtual acoustic environments from the investigations with applications in the performing arts. The project will emphasise the human voice in particular and its integration with the soundscape of the cerrado. Raw data from the field research will be used in the creation of new artworks and made available on the Internet to encourage dialogue between disciplines and new research. More generally, the project intends to feature the cerrado from an audio perspective. The project will welcome the participation of the public from the regional town of Alto Paraíso and will form part of the activities of research and extension of the collective Audiocena, part of the Laboratório de Performance e Teatro do Vazio (LPTV), of UnB. Cerrado Ambisônico will produce works of theatrical installation and radiophonic art, contributing to the education of undergraduate students of Artes Cênicas at the Universidade de Brasília from an interdisciplinary perspective.

Methodology

The approach of the Canadian-derived movement of acoustic ecology will be the conceptual base of the project, examining the acoustic elements of a designated place as a dynamic system of information exchanges. Impulse response measurements will be made to facilitate the simulation of the acoustics of the environment by way of an associated process of convolution. Moreover, the techniques of ambisonic recording and binaural recording will enable the reproduction of soundscapes and their acoustics on headphones or multichannel systems of loudspeakers.
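As a rough illustration of the convolution step mentioned above, the sketch below applies an impulse response to a dry signal by direct convolution. The function name `convolve` and the toy sample values are assumptions made for illustration only; in practice a partitioned convolution engine would be used rather than this simple nested loop.

```python
def convolve(dry, impulse_response):
    """Direct-form convolution of a dry signal with an impulse response."""
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for n, x in enumerate(dry):
        for k, h in enumerate(impulse_response):
            # Each input sample launches a scaled copy of the impulse response.
            out[n + k] += x * h
    return out


# Toy data (assumed): a single click, and an impulse response made of a
# direct sound followed by two quieter reflections.
dry = [1.0, 0.0, 0.0, 0.0]
ir = [1.0, 0.0, 0.5, 0.25]
wet = convolve(dry, ir)
```

Because the dry signal here is a single click, the output simply reproduces the impulse response, which is the property that makes a measured impulse response a usable acoustic "fingerprint" of a place.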

* Ambisonic Cerrado. Cerrado is the general term for the savanna-like vegetation of central Brazil. The cerrado is extensive, occupying an estimated 2 million km² of land, and has great biodiversity.

O Espelho (The Mirror) is a theatrical sound installation inspired in part by the story of the same name by 19th century Brazilian writer Machado de Assis. It is by sound artist Iain Mott and performer Simone Reis and includes objects and paintings by visual artist Nelson Maravalhas and video by Alexandre Rangel. O Espelho investigates the games of deception we play with our identities using a variety of contemporary audio technologies, video and visual illusions from the 19th century, together with pre-recorded performance and electroacoustic composition. The installation is devised for a solitary viewer in keeping with the isolation experienced by the protagonist in the original story. Of great importance in this work is its investigation of how sound, voice and listening can function ontologically side by side with visual representations of the self.

The characters played by Simone Reis are projected as "Pepper's ghosts" in a false-room adjoining the publicly accessible installation space. This 19th century theatre technique involves angled glass positioned on a stage or diorama to provide the illusion that the space is occupied by a phantom figure. In our installation, the false-room is identical to the installation-space; however, all furniture as well as pictures and objects on the walls by Nelson Maravalhas are arranged in mirror-image. The gallery visitor sees the projected video images and the false-room as if they were a reflection. This is by way of a false-mirror, a simple pane of glass in a frame. An "audio spotlight" (a special loudspeaker) is used to produce what are experienced as "in-head" sounds by the participant. These sounds principally correspond to the voice of the false-reflection played by Simone. Other sounds representing the memories, thoughts and fantasies of the characters (and by implication, the visitor), surround the seated listener and are projected in the space by what is known as an "ambisonic" sound system, consisting of multiple loudspeakers.

CCBB, Brasilia, 14/7 - 16/9, 2012 - Galeria de Artes Van Gogh, Sobradinho, 21/9 - 21/10, 2012 - Teatro Newton Rossi, SESC Ceilândia, 26/10/2012 - 26/1/2013.

Credits

O Espelho is by sound artist Iain Mott and actress/performer Simone Reis in collaboration with others including: video maker Alexandre Rangel, visual artist Nelson Maravalhas, playwright Camilo Pellegrini, lighting designer and architect Jamile Tormann and costume designers Cyntia Carla and Simone Reis. The production is by Alaôr Rosa and Arte Viva Produções with funding from the Fundo de Apoio à Cultura (FAC) of Brasilia and the Centro Cultural Banco do Brasil (CCBB).

Installation concept: Iain Mott

Artistic directors: Iain Mott and Simone Reis

Script: Simone Reis, Iain Mott and Camilo Pellegrini

Curators: Simone Reis and Iain Mott

Performance: Simone Reis

Stage direction: Simone Reis and Iain Mott

Composition and sound design: Iain Mott

Video: Alexandre Rangel

Computer programming: Iain Mott

Lighting design: Jamile Tormann

Paintings and objects: Nelson Maravalhas

Photography: Mila Petrillo/Rayssa Coe/W. Hermusche

Direction assistant: Luara Learth

Make up: Raphael Balduzzi

Press office: Rodrigo Machado, Territorio Cultural Assessoria de Comunicacao

Project coordination, production and director of production: Arteviva Produções/Alaôr Rosa and Fernanda Oliveira

Executive producer: Nalva Sysnandes

Acknowledgements

The following people and groups are gratefully acknowledged for their contribution toward the conception or development of the project:

Antonia da Silva Reis, Carlos Lin Silva, Celso Araújo, Cláudia Gomes, Dalton Camargos, Davi Reis, Departamento de Música e Departamento de Artes Cênicas do Instituto de Artes da UnB, Fons Adriaensen, Greg Schiemer, Guilherme Reis e Espaço Cena, Guillaume Potard, Jim Sosnin, Luís Augusto Jungmann Girafa, Marc and Elizabeth Raszewski, Marília Panitz, Núcleo de Vivência da UnB, Odila Bohrer, Olive Maureen Mott, Renata Azambuja, Ros Bandt, Stephen Barrass, Zé Celso Martinez Correia

Software

Puredata with iem_ambi, iemlib, iemgui, pduino, ggee, zexy and room_sim_2d, as well as Jack, Ardour, Ambdec, Jconvolver, Qutecsound, Rosegarden, Xjadeo and Final Cut Pro.

Hardware

Linux computer, LCD TV, Lanbox/dimmers/lights, Bose FreeSpace loudspeakers, Holosonics Audio Spotlight, RME Multiface, Netgear router, Arduino and pressure sensor.

A New Method for Interactive Sound Spatialisation

Published in Proceedings of the 1996 International Computer Music Conference.

Iain Mott

Conservatorium of Music

University of Tasmania

Jim Sosnin

School of Arts and Media

La Trobe University

Abstract

This paper describes a new interface for interactive sound spatialisation by the public over three-dimensional (3-D) loudspeaker arrays. The interface uses ultrasound to implement an adaptation of F. R. Moore's General Model for Spatial Processing of Sounds and offers clear ergonomic and computational advantages over existing strategies. It is designed for use by non-trained individuals but could be equally useful in a performance context especially where the performer is remote from the projected sound field.

1 Introduction

The design of expressive public interactive music installations obligates an approach centred on guiding the public through specific sets of activities. An activity-based approach is necessary to elicit appropriate music generating behaviour from participants. Physical restrictions on the actions of participants are generally needed to keep behaviour within functional bounds. In addition, where adequate restraint of behaviour is unfeasible, it may also be necessary for designers to rationalise the sensitivity of sound generating mechanisms.

First-time users of interactive installations may not fully recognise the relationship between their own actions and the resulting sound. Hence, designers of public interactive works must establish potent techniques to facilitate rapid cognition in participants. It is important that participants are aware of the consequences of their actions, as it is through informed behaviour that composers can best communicate aesthetic material in an interactive context.

This paper will discuss a new method for interactive sound spatialisation by the public. The system has been devised for The Talking Chair [Mott, 1995], an interactive sound installation that enables non-trained individuals to manipulate compositional algorithms and control the 3-D motion of a virtual sound object around their body. We have developed a design with strong spatialised visual reference points to aid the first-time user in interactive sound spatialisation. While the interface is designed to control both music generating algorithms and the spatialisation of sound objects, discussion of the compositional element is beyond the scope of this paper.

2 Earlier Design

Our current work is performed as part of an ongoing investigation into interactive music. In collaborations with designers and animators we have produced works exploring spatial sound manipulation by the public. Squeezebox was made after The Talking Chair in 1994 and was produced in part to examine the use of restricted movement in public interaction. Unlike The Talking Chair, which allows unhindered spatial gestures within a given region, the Squeezebox interface utilises four pneumatic pistons to ensure smooth gestures from the public.

The impetus for designing a new spatial controller came from a need to readdress human factors associated with The Talking Chair. The first 1994 version of the installation (Figure 1) implemented John Chowning's [1971] theories of moving sound simulation and used an ultrasound wand device as an interface. A single seated participant moved the wand through a cubic zone above the lap to control the motion of sound. Ultrasound pulses transmitted from the wand were picked up by three fixed receivers and gestures were mapped in the form of Cartesian coordinates. A mirrored display cabinet was situated in front of the listener as a visual aid to the spatial navigation of sound. The viewing cabinet, using a magic box technique [Popular Mechanics Co., 1913], displayed the illuminated wand tip as a glowing ball moving about a reflected image of the participant's head. The position of the ball relative to the head corresponded to the perceived position of sound in the space surrounding the listener.

Figure 1: 1994 version of The Talking Chair

Regrettably, while the magic box system worked effectively, its use required detailed written explanation and some practice. We found that few people in museum environments fully read the detailed diagrams and as a result, the cabinet often proved confusing and distracting. The complexity of the interface also led a few individuals to believe that the cabinet itself was the object of the interaction! The magic box display, in addition, requires a degree of control over ambient lighting that is not always practical in many public spaces. A simplification of approach was in order.

3 Methodology

In attempting to remedy shortcomings in our original human-machine interface our two main aims were to a) efficiently map the spatial gestures of participants to factors simulating the spatial motion of sound and b) provide an interface design that explicitly informs the participant of the spatial relationship between their body and the virtual sound object.

We chose F. R. Moore's [1983] General Model for Spatial Processing of Sounds as the basis for our interface design. The model is readily adaptable to a user interface using ultrasound technology and has qualities which are highly instructive in the interactive positioning of sound relative to the body.

The model was originally produced to address problems of listener perspective in multi-speaker systems by generating audio delays and amplitudes with respect to the loudspeakers rather than the listener. In this way listeners in different locations within a sound field will each receive a different perspective of the same spatial event. Loudspeakers in spatial arrays are modelled as windows in a room, the size and shape of which is determined by the number and placement of the speakers. This inner room is surrounded by a larger outer room in which illusory sonic objects may move freely. The audience is contained within the inner room and perceives virtual sound objects through the windows. Spatialisation of sound is simulated by measuring the distance of the direct and reflected sound paths from the virtual object to each window. The distances are used to calculate individual amplitudes and time delays for each loudspeaker channel.
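The distance-to-parameter step described above can be sketched as follows. This is a minimal illustration of the direct paths only, not Moore's full model: the speaker layout, the speed of sound, the reference distance and the clamped inverse-distance gain law are all assumptions chosen for the example, and `direct_path_params` is a hypothetical helper name.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at roughly 20 degrees C


def direct_path_params(source, speakers, ref_distance=1.0):
    """Return a (delay_seconds, gain) pair per speaker for the direct path."""
    params = []
    for spk in speakers:
        d = math.dist(source, spk)            # path length, source to "window"
        delay = d / SPEED_OF_SOUND            # propagation time along that path
        gain = ref_distance / max(d, ref_distance)  # assumed law, clamped to 1
        params.append((delay, gain))
    return params


# Hypothetical six-speaker layout on the axes, one metre from the listener.
speakers = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
params = direct_path_params((3.0, 0.0, 0.0), speakers)
```

With the virtual source three metres out on the x axis, the nearest speaker receives the earliest and loudest copy of the signal and the opposite speaker the latest and quietest, which is the cue pattern the model relies on.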

We were attracted to the General Model, not because of issues of listener perspective but rather due to its potential to produce an interface design of great ergonomic clarity. By constructing a user interface as a physical analogue of the inner room we at once provide an efficient mechanism for real-time spatial control and a strong visual tool to communicate the position of sound with respect to the listener.

4 Implementation Overview

The Sound Sphere

The interface design incorporates a small sphere suspended from a stalk in front of the listener. The surface of the sound sphere is impregnated with six ultrasound receivers each in positions directly corresponding to the position of the speakers of The Talking Chair. An alignment tube is included in the interface so participants can adjust the seat height to position their head at the centre of the sound field. An ultrasound emitting wand is moved around the sound sphere to position the sound relative to the speakers of the sculpture. Participants will be informed in a simple four stage instruction chart (Figure 2) how to interact with the sculpture. Instruction covers a) the use of the alignment device b) how to change sounds and c) how to position sound objects relative to the sculpture and consequently the body of the participant. The discovery of the remaining nuances of interaction is left to the investigation of participants.

Amplitudes and Delays

The interface uses ultrasound to measure the distance from the wand to each receiver. Distance measurements are used to determine both amplitude and delay time of sound for each loudspeaker channel. Audio amplitudes are to be attenuated with a linear rather than an inverse cubic relationship [Moore, 1989] to distance, as such methods are more useful for interactive control [Ballan et. al., 1994].
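To make the contrast between the two attenuation laws concrete, here is a small sketch. The function names, the maximum distance and the reference distance are hypothetical values chosen for illustration; the paper does not specify the actual scaling used.

```python
def linear_gain(distance, max_distance):
    """Gain falls linearly from 1 at the receiver to 0 at max_distance."""
    return max(0.0, 1.0 - distance / max_distance)


def inverse_cube_gain(distance, ref_distance):
    """Gain proportional to 1/d^3, clamped to 1 inside ref_distance."""
    d = max(distance, ref_distance)
    return (ref_distance / d) ** 3


# Compare the two laws over an assumed 0.5 m working range.
distances = [0.1, 0.2, 0.3, 0.4, 0.5]
linear = [linear_gain(d, max_distance=0.5) for d in distances]
cubic = [inverse_cube_gain(d, ref_distance=0.1) for d in distances]
```

The linear law spreads the usable gain range evenly over the whole gesture, whereas the inverse cubic law uses up most of its range within a short distance of the receiver, leaving little audible change for the rest of the movement.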

With our implementation of the General Model, only the direct (not reflected) paths from the wand to each receiver are measured. While the omission of reflected paths represents a simplification of the model, a further complexity results from the distortion of the sound field caused by the sound sphere.

In the General Model, windows with direct sound paths obstructed by walls receive signal levels of zero. In cases where a window is suddenly shadowed by an obstructive wall, signal levels decline sharply and it is necessary to interpolate between amplitude values in order to avoid audible clicks [Moore, 1989]. With our interface design part of the ultrasound signal is diffracted around the surface of the sound sphere. Smooth amplitude transitions occur as windows (receivers) become gradually shadowed. Distance measurements from the wand to shadowed receivers already include the extra path lengths resulting from the sound wave following the curve of the surface, and are thus a truer representation in this context.
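The interpolation between amplitude values mentioned above can be sketched as a per-sample linear ramp. This is only an illustration of the general idea; `ramp_gains` is a hypothetical helper and the ramp length is an assumed parameter.

```python
def ramp_gains(start_gain, end_gain, num_samples):
    """Per-sample gains moving linearly from start_gain to end_gain."""
    if num_samples < 2:
        return [end_gain] * num_samples
    step = (end_gain - start_gain) / (num_samples - 1)
    return [start_gain + i * step for i in range(num_samples)]


def apply_ramp(samples, start_gain, end_gain):
    """Scale a block of samples by a gain that ramps across the block."""
    gains = ramp_gains(start_gain, end_gain, len(samples))
    return [s * g for s, g in zip(samples, gains)]


# A channel being shadowed: fade from full level to silence over five samples
# instead of switching abruptly, which would produce an audible click.
faded = apply_ramp([1.0, 1.0, 1.0, 1.0, 1.0], 1.0, 0.0)
```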

While it is possible for us to achieve stable amplitude measurements at each receiver, we will not, however, map these readings to loudspeaker amplitudes. Our transmitter is not fully omnidirectional and consequently models a sound object with a directional radiation pattern. In addition to the inverse square nature of the response, such directionality would render the interaction unsuitable to non-trained usage. A mechanism of this type is however worthy of continued investigation in a performance context.

Hardware

Reverberation is to be implemented using off-the-shelf effects units and the level will be determined according to the distance of the wand from the sound sphere. As in the 1994 version of The Talking Chair we will implement John Chowning's local and global reverberation techniques [1971] which we have found to be highly efficient and effective in simulating changes in distance.

The attenuation of individual audio channels is to be performed by a custom built MIDI controlled mixer. The device, which uses analog circuitry, is capable of controlling four independent sound objects in a six loudspeaker array. The device has the capacity to control the signal level of audio sent to each loudspeaker as well as controlling the level of both local and global reverberation. The mixer also contains insert points for the six individually tapped delays of one virtual object.

At the time of writing, the audio delay software required for the spatialisation is being implemented on a DSP56002 processor [Motorola Inc., 1993]. Whilst the processor is overkill for the simple delay processing required (basically, memory lookup and interpolation), it was decided to pursue this method as it is planned, eventually, to shift all of the spatial processing required for The Talking Chair and other projects onto the DSP and eliminate most of the analog circuitry currently being used.

Ultrasound distance measurements are performed by a PIC16C84 microcontroller [Edwards, 1994] [Microchip Technology Inc., 1994]. The unit produces its output as MIDI messages which will be received by a Macintosh computer running the FORMULA music language. In addition to controlling music generating hardware, the Macintosh will control the DSP56002 and the custom mixer via MIDI.

5 Ultrasound Specification

Choice of Frequency

Ultrasound transducers, of the type readily available to us, are highly directional devices. In order for them to provide useful distance information for interactive spatialisation it is necessary to enhance their omni-directional capacity. This is to ensure that for all angles, the signal strength of a direct path to a given receiver is of a higher intensity than that of reflected signals. The 1994 ultrasound implementation used 40 kHz transducers. The wand housed the transmitting transducer (Tx) and was moved through a zone monitored by three receivers. We found that by fixing a 16 mm (diameter) marble in front of the Tx, sufficient reflection occurred to allow distance (i.e. timing) measurements to work, no matter which direction the wand was pointed in.

The original wand would be impractical in the new implementation as it is necessary for the sonar wave to be diffracted, at least partially, around a sound sphere of perhaps 80 mm diameter. The new wand uses 25 kHz, which has a wavelength of around 13.5 mm, and although this is still much smaller than 80 mm, our tests showed that there was just enough diffraction around the sound sphere for useable distance (i.e. timing) measurements at all but the most distant receivers. A 40 kHz signal is far less useable as its shorter wavelength (8.5 mm) will result in far greater reflection.
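The wavelengths quoted above follow from dividing the speed of sound by the frequency. The short sketch below reproduces the arithmetic, assuming a speed of sound of 343 m/s; the paper does not state the value it used, and its rounded figures of 13.5 mm and 8.5 mm correspond to a slightly lower speed.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed; the source does not give a value


def wavelength_mm(frequency_hz, speed=SPEED_OF_SOUND):
    """Wavelength in millimetres for a given frequency in hertz."""
    return speed / frequency_hz * 1000.0


SPHERE_DIAMETER_MM = 80.0  # approximate sound sphere diameter from the text

wavelength_25k = wavelength_mm(25_000)   # close to 13.7 mm
wavelength_40k = wavelength_mm(40_000)   # close to 8.6 mm

# Ratio of sphere diameter to wavelength: the smaller this is, the more
# readily the wave bends around the sphere rather than reflecting off it.
ratio_25k = SPHERE_DIAMETER_MM / wavelength_25k
ratio_40k = SPHERE_DIAMETER_MM / wavelength_40k
```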

This lower operating frequency also allows the use of small (6 mm diameter) electret mics as the sonar receivers. Their small size results in them having a near-omnidirectional response pattern in free air (mic diaphragm diameter is smaller than wavelength), and thus a hemispherical response when they are mounted flush on the sound sphere surface. 25 kHz is somewhat above the intended operating frequency of the electret mics, which are designed for audio applications, but with sufficient amplification and appropriate bandpass filtering they are quite useable.

Modifications to 25 kHz Transducers

Attempts to improve the omni-directional response of 25 kHz transducers using a marble to scatter the beam have been unsuccessful. This is possibly due partly to the design differences between the two transducers as well as differences in wavelength. The 25 kHz Tx radiates directly from the piezoelectric surface via a grille, the centre 9 mm of which is blocked off, allowing sound only through the surrounding annulus. An enhanced omni-directional response has been achieved through the use of a conical device (Figure 3) attached to the front of the Tx. In our design, the inner concentric cone directs the annular cross-section wave to the tip of an outer cone, where the final release to the surrounding air is via a 4 mm diameter hole. This hole is much smaller than the 13.5 mm wavelength, so the radiation is nearly omnidirectional. With the ultrasound cone attached, the radiation in any direction is uniform within 6 dB, except for a shallow null of 9 dB at 165 degrees. This compares favourably with the radiation pattern measured originally on the bare transducer, which showed a variation of 26 dB (Figure 4).

Timing Measurements

The current implementation of the hardware uses a PIC16C84 microcontroller to generate the blips and process the received signals. It incorporates a manual adjustment of blip rate (30 to 60 ms between blips) to allow use in different reverberant spaces. It also measures all 6 received signals near-simultaneously, so that a complete set of measurements is made at each blip, rather than having to cycle through 6 blips (and wait 6 times as long before a system response to a wand movement can be perceived).

The MIDI data byte output of the PIC is currently set to 7 bits and the resolution of distance measurements is 6 mm. Data filtering techniques are to be applied in the Macintosh computer to smooth and interpolate between values.
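A sketch of how a timing measurement might be turned into a 7-bit value and then smoothed is given below. It is not the PIC firmware or the authors' filter: the speed of sound, the assumption that codes map linearly from zero distance, and the use of exponential smoothing with a hypothetical `alpha` parameter are all illustrative choices. Under those assumptions, 127 steps of 6 mm would cover a range of 762 mm.

```python
SPEED_OF_SOUND = 343.0   # m/s, assumed
RESOLUTION_M = 0.006     # 6 mm per step, as stated in the text
MAX_CODE = 127           # largest value that fits in a 7-bit MIDI data byte


def time_of_flight_to_code(seconds):
    """Quantise a one-way time of flight to a 7-bit distance code."""
    distance = seconds * SPEED_OF_SOUND
    return min(MAX_CODE, round(distance / RESOLUTION_M))


def smooth(codes, alpha=0.3):
    """Exponential smoothing of a stream of codes (assumed filter type)."""
    out = []
    level = None
    for c in codes:
        # The first reading seeds the filter; later ones nudge it by alpha.
        level = c if level is None else level + alpha * (c - level)
        out.append(level)
    return out


max_range_m = MAX_CODE * RESOLUTION_M          # 0.762 m under these assumptions
code = time_of_flight_to_code(0.001)           # 1 ms of travel, about 0.343 m
smoothed = smooth([10, 20], alpha=0.5)
```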

The Tx is pulsed with bursts of 8 cycles of the 25 kHz signal (each burst lasts for 0.32 ms). This signal is generated in software, and drives the Tx from two PIC data lines via a differential summing amp. The final waveform is symmetrical, swinging ±20 V, with zero average DC. Lower frequency clicks from the Tx, which had earlier been a problem, are thus almost inaudible, despite the higher power used to compensate for losses in the cone structure.

6 Summary

The new Talking Chair user interface has significant advantages over the original magic box device. The most notable advancement is the shift from a 2D frame of reference to a true 3D method. Freedom of movement is more limited with the new interface due to partial obstruction by the attachment stalk and the need to navigate the wand in close proximity to a solid surface. We believe, however, that the strong ability of the device to communicate notions of spatial location far outweighs obstruction concerns in a context of public interaction.

References

[Ballan et. al., 1994] Oscar Ballan, Luca Mozzoni and Davide Rocchesso. Sound Spatialisation in Real Time by First-Reflection Simulation. Proceedings of the 1994 International Computer Music Conference, pp.475-476, 1994.

[Chowning, 1971] John Chowning. The Simulation of Moving Sound Sources, Journal of the Audio Engineering Society 19(1): pp.1-6, 1971.

[Edwards, 1994] Scott Edwards. The PIC Source Book, Scott Edwards, 1994.

[Microchip Technology Inc., 1994] PIC16C84 Data Book, DS30081C (Preliminary), Microchip Technology Inc., 1994.

[Moore, 1983] F. Richard Moore. A General Model for Spatial Processing of Sounds, Computer Music Journal 7(3): pp.6-15, 1983.

[Moore, 1989] F. Richard Moore. Spatialization of Sounds over Loudspeakers. In Max V. Mathews and John R. Pierce (Eds.): Current Directions in Computer Music Research, MIT Press, Cambridge Massachusetts, pp.89-103, 1989.

[Motorola Inc., 1993] DSP56002 Digital Signal Processor User's Manual, Motorola Inc. 1993.

[Mott, 1995] Iain Mott. The Talking Chair: Notes on a Sound Sculpture, Leonardo 28(1): pp.69-70, 1995.

[Popular Mechanics Co., 1913] An Electric Illusion Box in The Boy Mechanic, Volume I, Popular Mechanics Co., Chicago, pp.130-131, 1913.


(Iain Mott, Leonardo 28:1, MIT Press, 1995)

The Talking Chair is an interactive listening environment created in collaboration by designer Marc Raszewski and myself, a composer. Integrating sculpture, electronics and industrial design, the Chair immerses the listener in a 3-dimensional sound space.

The concept of the Talking Chair developed from a number of spatial sound compositions, inspired by John Chowning's "Turenas", that I wrote for performer and electronics in a concert environment. I decided to make a break from large scale sound projection to a more intimate, one-to-one relationship with the audience. The Talking Chair focuses on the individual, avoiding many technical complications of auditorium projection. More importantly, however, the Chair has enabled me to communicate to an audience the element most rewarding to me in composing and performing the earlier pieces: the act of directly connecting with spatial sound.

We have endeavoured to sensitise the listener to the kinetic and 3-dimensional qualities of sound by producing a holistic theatre of sensation. The Talking Chair is an attempt to forge links between the ephemeral nature of music and the material world, by expressing musical process through a physical poetry. It requires the creative input of the individual to give it meaning, to perfect an imaginary universe of cause, effect and response.

Marc Raszewski's design consists of a system of interlocking steel rings, supporting a battery of six loud-speakers, a cabinet of glass and mirrors, and an anthropomorphic chair made from cast aluminium, plywood panels, and steel fittings, which forms the centre piece of the sculpture. The outer structural rings direct attention towards the chair, suggesting a metaphor of human interaction with technology.

Participants are instructed by signage to take a seat in the chair, pick up the wand located to the side of the cabinet in front of them, and move the wand through the region between their body and the lower half of the cabinet. As they do so, clusters of sound issue from the speakers, drawing invisible shapes through a spherical sound space surrounding their body. The pitch, timbre, loudness and density of the sound change with the spatial position and velocity of the wand. As an aid to the navigation of sound, the viewing cabinet displays the wand tip as a glowing ball moving about a reflected image of the participant's head. The position of the ball relative to the head corresponds to the perceived position of sound in the space surrounding the listener.

The sonic landscape created within the Talking Chair is an amalgam of sampled and synthesised sounds chosen for their kinetic dynamics, and expressive potential. New sounds are selected on the basis of probability by pressing a button on the wand. Each new sound is unique in terms of its timbre, as well as its musical response to gesture, confronting the participant with unpredictable spatial strategies of interaction. The user develops a cognitive relationship with each sound, exploring 3-dimensional space with the wand to uncover its logic and sonic capacities.

The Talking Chair was exhibited at the Linden, Melbourne in March 1994.

Notes

The signal from an ultrasound transmitter situated on the wand is picked up by three distance measuring receivers, the outputs of which are digitised and converted into 3-dimensional coordinates. A computer operating the music programming language FORMULA receives this information and uses it as an input for various compositional algorithms and to determine the spatial projection of sound. Spatial projection is achieved by attenuating the level of sound fed to each speaker and adjusting the amount of artificial reverberation, using a digitally controlled mixer capable of projecting four independent channels of sound. The sound sources are a sampling keyboard, an FM synthesiser and two channels of sound on a recordable CD.
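One common way to convert three distance readings into 3-dimensional coordinates is trilateration, sketched below. The notes do not say which method the installation used, so this is an assumed technique; the receiver placement (all three in the plane z = 0, at hypothetical positions) and the choice of the positive z solution are also assumptions.

```python
import math


def trilaterate(r1, r2, r3, d, i, j):
    """Locate a point from its distances to three receivers.

    Receivers are assumed at P1=(0,0,0), P2=(d,0,0) and P3=(i,j,0).
    Returns the solution with z >= 0; its mirror image below the
    receiver plane fits the same three distances equally well.
    """
    x = (r1**2 - r2**2 + d**2) / (2 * d)
    y = (r1**2 - r3**2 + i**2 + j**2) / (2 * j) - (i / j) * x
    z_squared = r1**2 - x**2 - y**2
    z = math.sqrt(max(0.0, z_squared))  # clamp small negatives from noise
    return x, y, z


# Hypothetical geometry in metres: recover a known wand position.
wand = (0.1, 0.2, 0.3)
receivers = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.2, 0.4, 0.0)]
r1, r2, r3 = (math.dist(wand, p) for p in receivers)
estimate = trilaterate(r1, r2, r3, d=0.5, i=0.2, j=0.4)
```

With only three receivers the sign of z is ambiguous, so a design along these lines would need the wand to stay on one known side of the receiver plane.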

Squeezebox incorporates spatial sound, computer graphics and kinetic sculpture. Participants manipulate the sculpture to produce real-time changes to the spatial location and timbre of the sound, as well as to manipulate digitised images. The sound and images are presented as an integrated plastic object, a form which is squeezed and moulded by participants. The artwork consists of a frame supporting four sculpted pistons on pneumatic shafts. An interactive image is displayed on a monitor beneath a one-way mirror at the centre of the sculpture. Four loudspeakers are situated at the outer four corners.

The cast hands of Squeezebox invite participation. Participants grasp and press down the sculpted pieces, working against a pneumatic back-pressure to elicit both sound and image. The interaction reveals a form which has visual, aural and physical properties. As participants press down on alternate pistons, a sound mass is shifted from one point of the sculpture to another. Music is produced algorithmically and is derived from a set of rules which respond to the spatial location of the sound mass. The system of rules, however, is never static. One spatial strategy gives way to another, resulting in an evolution of sound and requiring a constant readjustment of focus in the listener.

Squeezebox is a collaboration between Iain Mott, Marc Raszewski and artist Tim Barrass, who designed the interactive graphics. It was first exhibited in "Earwitness", Experimenta '94, ether ohnetitel, Melbourne, 1994. The project was produced with the assistance of The Australia Council, the Federal Government's arts funding and advisory body.

The Talking Chair is a listening environment for three-dimensional sound, allowing participants to control the trajectory of sound through the space surrounding their body. The work consists of a frame supporting a battery of six audio speakers, a central chair, and an ultrasound wand interface. A remote audio system is linked by cabling. Seated in the chair, participants interact with the sculpture by means of the wand which generates 3-dimensional information used to produce sound and draw its trajectory. As the sound object moves, its sonic qualities change in response to its proximity to the listener, velocity and spatial location. The physical form of The Talking Chair, in addition to fulfilling the functional requirements of spatial sound projection, serves to represent a material manifestation of kinetic sound. The sculpted chair assumes a metaphorical human presence amid the arcs and curves of the outer frame which define a dynamic spherical sound space around the listener.

The Talking Chair is a collaborative project by Iain Mott, Marc Raszewski & Jim Sosnin. It was produced with the assistance of the Australia Council, the Federal Government's arts funding and advisory body. The sculpture has been exhibited within Victoria and Tasmania in Australia and at the 1996 International Computer Music Conference in Hong Kong.