Iain Mott

Iain Mott is a sound artist who has lived in Brazil since 2007. He was a visiting professor in the Music Department at the University of Brasilia (UnB) from 2008 to 2011 and a lecturer (professor adjunto) in voice and sound design in the Departamento de Artes Cênicas (theatre arts), UnB, from 2012 to 2024. His sound installations are characterised by high levels of audience participation and novel approaches to interactivity. He has exhibited widely in Australia and at shows including the Ars Electronica Festival in Linz, Emoção Art.ficial in São Paulo, and the Dashanzi International Art Festival and Multimedia Art Asia Pacific (MAAP) in Beijing. His most recent installation, O Espelho, created with Simone Reis, was exhibited at the Centro Cultural Banco do Brasil (CCBB) in Brasilia in the second half of 2012. Iain has received numerous awards and grants and has successfully managed innovative projects for almost 20 years. His GPS-based project Sound Mapping was awarded an Honorary Mention in the 1998 Prix Ars Electronica. In 2005 he was awarded an Australia China Council Arts Fellowship to work with the Beijing arts company the Long March Project. His work Zhong Shuo, created during the fellowship in collaboration with Chinese artists, was awarded 3rd prize in the UNESCO Digital Art Awards, and was selected by MAAP for two further installations, in Shanghai and Brisbane, in 2006. Iain was artist in residence at CSIRO Mathematical and Information Sciences in Canberra for 12 months in 1999/2000. Collaboration between artist and audience remains central to Iain's work. His PhD from the University of Wollongong, supervised by Greg Schiemer, is entitled Sound Installation and Self-listening.

Squeezebox

Squeezebox incorporates spatial sound, computer graphics and kinetic sculpture. Participants manipulate the sculpture to produce real-time changes to the spatial location and timbre of the sound, and to manipulate digitised images. The sound and images are presented as an integrated plastic object, a form which participants squeeze and mould. The artwork consists of a frame supporting four sculpted pistons on pneumatic shafts. An interactive image is displayed on a monitor beneath a one-way mirror at the centre of the sculpture, and four loudspeakers are situated at the outer four corners. The cast hands of Squeezebox invite participation. Participants grasp and press down the sculpted pieces, working against pneumatic back-pressure to elicit both sound and image. The interaction reveals a form with visual, aural and physical properties. By pressing down on alternate pistons, participants shift a sound mass from one point of the sculpture to another. Music is produced algorithmically from a set of rules that respond to the spatial location of the sound mass. The system of rules, however, is never static: one spatial strategy gives way to another, producing an evolution of sound that requires the listener to constantly readjust their focus.
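The description above does not say how piston movement is translated into the position of the sound mass. The Python sketch below is an illustration only and rests on three assumptions. Each piston is paired with one corner loudspeaker. The sound mass sits at the centroid of the corners, weighted by how far each piston is pressed. The four speaker gains come from equal-power panning. `CORNERS`, `sound_mass_position` and `corner_gains` are hypothetical names and are not part of the work's software.

```python
import math

# Hypothetical layout: piston i is assumed to sit beside corner loudspeaker i,
# with corners on a unit square (x = left/right, y = front/back).
CORNERS = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]


def sound_mass_position(depressions):
    """Centroid of the corners weighted by piston depression (each 0..1)."""
    total = sum(depressions)
    if total == 0:
        return (0.5, 0.5)  # nothing pressed: park the sound mass at the centre
    x = sum(d * cx for d, (cx, _) in zip(depressions, CORNERS)) / total
    y = sum(d * cy for d, (_, cy) in zip(depressions, CORNERS)) / total
    return (x, y)


def corner_gains(x, y):
    """Equal-power gains for the four corner speakers at position (x, y)."""
    gx = (math.cos(x * math.pi / 2), math.sin(x * math.pi / 2))  # (left, right)
    gy = (math.cos(y * math.pi / 2), math.sin(y * math.pi / 2))  # (front, back)
    return [gx[int(cx)] * gy[int(cy)] for cx, cy in CORNERS]


pos = sound_mass_position([1.0, 0.0, 0.0, 0.0])  # only the first piston pressed
print(pos, corner_gains(*pos))
```

Equal-power panning keeps the squared gains summing to 1 wherever the mass sits, so the sound does not dip in loudness as it moves between speakers.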

Squeezebox is a collaboration between Iain Mott, Marc Raszewski and artist Tim Barrass, who designed the interactive graphics. It was first exhibited in "Earwitness", Experimenta '94, ether ohnetitel, Melbourne, 1994. The project was produced with the assistance of the Australia Council, the Federal Government's arts funding and advisory body.

Pope's Eye

The composition Pope's Eye by Iain Mott was an outcome of a two-week artist residency undertaken by Ros Bandt and Iain Mott at the Melbourne Aquarium in 2004. Bandt and Mott made hydrophone recordings at the aquarium as well as at Pope's Eye in Port Phillip Bay, Victoria. The aquarium recordings were subject to high levels of noise from the filtration equipment, and noise reduction software was used to isolate the marine sounds. The sounds heard in this composition include feeding sounds of marine life (fish and crustaceans), fish calls, staff divers at the aquarium, a motor boat on the bay and gannets at Pope's Eye. Other than noise reduction, very little audio processing was applied to the recorded sound; the sounds were simply edited into a narrative form.

The Great Call

The Great Call is an early composition by Iain Mott made at La Trobe University. It was originally composed for the University of Melbourne Guild Dance Theatre's production of Signals in 1989, and was later performed with the Bell & Whistle Company as part of the Astra concert program on 5 October 1990 at Elm St Hall, North Melbourne. The composition is based on analogue tape recordings of the "great call", the vocalisation of the white-cheeked gibbon (Hylobates concolor) that gives the piece its name, made at the Melbourne Zoological Gardens in March 1988. In the studio, a pitch-to-MIDI tracking device was used to control an Oberheim Xpander synthesiser and a sampler. Other synthesised sounds, percussion and vocalisations were improvised and recorded by the composer on multitrack tape.
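A pitch-to-MIDI tracker estimates the fundamental frequency of its input and converts it to the nearest equal-tempered MIDI note. The Python sketch below shows the standard conversion, with MIDI note 69 at A4 = 440 Hz. The function name and the cents output are illustrative, and the sketch does not describe the particular device used for The Great Call.

```python
import math


def freq_to_midi(freq_hz, a4_hz=440.0):
    """Return (nearest MIDI note, deviation in cents) for a tracked frequency.

    Uses the equal-tempered relation note = 69 + 12 * log2(f / 440 Hz);
    the cents deviation is what a tracker could send as pitch bend.
    """
    exact = 69 + 12 * math.log2(freq_hz / a4_hz)
    note = round(exact)
    cents = (exact - note) * 100
    return note, cents


print(freq_to_midi(440.0))   # (69, 0.0): A4
print(freq_to_midi(261.63))  # note 60 (middle C), a fraction of a cent sharp
```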

Sound Mapping: Field Recording

Unedited stereo field recording of Sound Mapping at the docks of Sullivan's Cove in Hobart, Tasmania. The players were: Catheryn Gurrin, Jody Kingston, Iain Mott and Jirrah Walker.

Sound Mapping: an assertion of place

Iain Mott, Conservatorium of Music, University of Tasmania

Jim Sosnin

This paper argues for the role of sound installation in addressing the physical relationship between music and the general public. The discussion focuses on Sound Mapping, an outdoor interactive music event that explores these issues. Sound Mapping is a site-specific algorithmic composition to be staged in the Sullivan's Cove district of Hobart. It uses four mobile sound sources, each carried by a member of the public. The sound sources play in response to geographical location and participant interaction. The system combines satellite and motion-sensing equipment with sound-generating equipment under computer control. The project aims to assert a sense of place, physicality and engagement to reaffirm the relationship between art and the everyday activities of life.

1 Music and Physicality

Digital technology, for all its virtues as a precise tool for analysis, articulation of data, communication and control, is propelling society towards a detachment from physicality. In music, the progressive detachment of the populace from the act of making music has a long history, with roots in earlier technologies and cultural practices. Perhaps the most critical early development is the musical score, which was born of a European paradigm that emphasised literary culture over oral culture. Not only does the score require music to be produced by trained or professional musicians; it further abstracts the relationship between musical idea and sound, with the consequent emergence of the composer as an individual distinct from the performers.

The introduction of the phonograph and radio in the early twentieth century broke the physical relationship between performer and listener entirely. Talking films and television have alleviated this crisis to a degree; however, the relationship regained is purely visual, not physical. Worse still, performing musicians working in all such media, like screen actors (Benjamin, 1979), are denied direct interaction with their audience (and vice versa). The representation is dislocated from its origins in time and space (Concannon, 1990).

Advocates of telecommunication technology boast of the medium's ability to connect individuals with events they would otherwise not see, and live broadcasts are presented as legitimate live experience. In experiential terms, however, the distinction between live and recorded broadcast is irrelevant without interaction. Even where interaction exists (e.g. the internet), the audience remains dislocated from the performance and has fewer channels of communication than it would through physical engagement with the event.

Digital technologies have addressed issues relating to public interaction with some success. Virtual reality (VR) attempts to remedy the shortcomings of interaction through multi-sensory, multi-directional engagement. However, it often operates in denial of the body, providing a substitute "virtual flesh" with which to engage an imagined universe. It is arguable whether a truly disembodied experience is attainable, but it is nonetheless an ideal of many VR proponents (Hayles, 1996). Such disembodiment is tantamount to the postmodern vision, with its obsession with reproduction over production, the irrelevance of time and place, and the interchangeability of man and machine (Boyer, 1996).

VR offers composers an extended language beyond the traditional musical dimensions of pitch and texture. Navigation, interaction and the relationship between sound and physical form represent new and significant inputs to the musical experience. The question remains: what constitutes real experience? In an era when people increasingly live an existence mediated by communications networks, the distinction between the real and the virtual becomes blurred (Murphie, 1990). While artists must engage with the contemporary state of society, they must also be aware of the aesthetic implications of pursuing digital technologies. They should consider exploring avenues that connect individuals to the constructs and responsibilities of physical existence. In the words of Katherine Hayles: "If we can live in computers, why worry about air pollution or protein-based viruses?" (1996).

Problems associated with technology and the public's physical engagement in active music-making go beyond the mechanics of production. The monopoly share of music production held by the mass media reduces society's participation. The sheer abundance of mediated music is responsible for this and, further, can discourage society from listening (Westerkamp, 1990).

Installation as a means of presenting music has the potential to address issues of time, physicality, access and engagement. In computer music, installation can strategically bridge the gap between a body of artistic research and the general public. Installation can offer tangibility and an environment in which individuals engage kinaesthetically with the work and with other individuals. Works have discrete physicality as well as a location within the greater spatial environment. Music installation can reassert the matrix of time and space, and has the capacity to anchor a potentially metaphysical musical object (pure sound) to the physical realities of life.

2 Outline

Sound Mapping is a site-specific music event to be staged in the Sullivan's Cove district of Hobart in collaboration with the Tasmanian Museum and Art Gallery. The project is currently under development and will be presented by the Museum in January 1998. It is a collaboration between composer Iain Mott, electronic designer Jim Sosnin and architect Marc Raszewski. The three have worked together previously on the Talking Chair (Mott, 1995; Mott & Sosnin, 1996) and Squeezebox sound installation projects.

The project involves four mobile sound sources, each carried by a member of the public. Each source is a portable computer music module housed in a wheeled, hard-cover suitcase. Accompanied by a Museum attendant, groups of individuals will wheel the suitcases through a specified district of Sullivan's Cove (Figure 1), following a path of their choice. Each participant plays distinct music in response to location, movement and the actions of the other participants. In this way a non-linear algorithmic composition is constructed that maps the footpaths, roadways and open spaces of the region and the interaction of participating individuals.

3 Communication

Data Communication

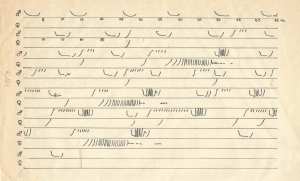

A schematic summary of the Sound Mapping communications system is provided in Figure 2. The group of modules consists of a single hub case and three standard cases. All the cases contain battery power, a public address system, an odometer and two piezoelectric gyroscopes. The standard cases also contain a data radio transmitter for sending data to the hub and an audio radio receiver for a single, distinct channel of music broadcast from the hub.

The hub case contains a Differential Global Positioning System (DGPS) receiver that generates spatial coordinates locating the group to an accuracy of 5 m. GPS is a worldwide radio-navigation system formed from a constellation of 24 satellites and their ground stations; it uses these satellites as reference points to calculate the position of GPS receivers anywhere on Earth. DGPS offers greater accuracy than standard GPS and requires an additional radio receiver for an error-correcting broadcast from a local base station (Trimble, 1997). The Australian Surveying and Land Information Group (AUSLIG) offers subscriptions to such signals, broadcast on the JJJ FM carrier signal.
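DGPS relies on a base station at a surveyed position seeing almost the same errors as a nearby receiver. The error the base station measures can therefore be broadcast and subtracted. Operational services broadcast corrections to each satellite's pseudorange (commonly in the RTCM SC-104 format) rather than a finished position offset. The simplified Python sketch below uses the position-domain form only to show the idea. It assumes positions have already been converted to a local east/north grid in metres. The `Fix` type and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Fix:
    east_m: float   # local east coordinate, metres (assumed local grid)
    north_m: float  # local north coordinate, metres


def position_correction(base_known: Fix, base_measured: Fix) -> Fix:
    """Error seen at the base station: surveyed position minus GPS estimate."""
    return Fix(base_known.east_m - base_measured.east_m,
               base_known.north_m - base_measured.north_m)


def apply_correction(rover_measured: Fix, corr: Fix) -> Fix:
    """Assume the nearby receiver shares the base station's error and remove it."""
    return Fix(rover_measured.east_m + corr.east_m,
               rover_measured.north_m + corr.north_m)


base_known = Fix(0.0, 0.0)
base_measured = Fix(12.0, -7.5)  # hypothetical GPS error: 12 m east, 7.5 m south
corr = position_correction(base_known, base_measured)
rover = apply_correction(Fix(512.0, 293.5), corr)
print(rover)  # Fix(east_m=500.0, north_m=301.0)
```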

The hub produces music on a four-channel sound module controlled by a lap-top computer, in response to its own location as well as motion data from itself and the three standard cases. Each channel of music is produced for a specific case and is broadcast to that case by radio. Motion data is generated by the odometer and gyroscopes in each case, and the standard cases send theirs to the hub by data radio transmitter. The odometers measure wheel rotation in both directions, and the two gyroscopes measure tilt and azimuth.

The technical distinction between the hub and standard cases is designed to be transparent: participants will be unaware of the leading role of the hub.

Apparent Communication

The channels and dynamics of communication are among the defining characteristics of interactive artworks (Bell, 1991). Sound Mapping is an attempt to explore modes of communication beyond those used in the Talking Chair and Squeezebox projects.

From the perspective of participants, communication will travel along four multi-dimensional pathways: (a) between participants and their respective suitcases; (b) between onlookers and participants; (c) between the environment and the group; and (d) between the participants themselves (Figure 3).

In the first case, there is a relationship between the kinaesthetic gestures of participants and the resulting music, and this communication takes the form of mutual feedback.

The interaction between onlookers and participants is expected to be intense because of the very public nature of the space. It will be musical, visual and verbal. It will also be social, confronting participants with taboos relating to exhibitionism. This is likely to deter many people from participating, but it is nonetheless hoped that the element of performance will add to the power of the experience for both participants and onlookers.

The effect of the environment on the work marks a development from our previous installations, in its capacity to dynamically signify musical elements. Music will react to the architecture and urban planning of Sullivan's Cove by means of GPS, which will be used to correlate musical algorithms with specific urban structures.

Communication between participants will be verbal, visual and musical. Like the relationship between the group and onlookers, it will feature social dimensions such as the interplay of personalities. Musical communication between individuals will be complex because the music algorithms specific to each case are interrelated. Algorithms will use pooled musical resources (Polansky, 1994), enabling participants to share structures and contribute information to elements such as rhythmic structures, timbre and pitch sets. Verbal and visual communication between participants in Sound Mapping is critical. Participants will need to establish the musical relationship that exists between them and communicate to coordinate group music-making. The group will need to agree on decisions to seek out areas of interest within the space in relation to urban structures. Participants are likely to discuss the relationship of sound to both gesture and the environment.
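The paper does not specify how the pooled resources will work. The minimal Python sketch below assumes a single shared pool of weighted pitch classes that every case can both reinforce and draw from. Rhythm and timbre pools could follow the same pattern. `SharedPool` and its methods are hypothetical and are not taken from Polansky's HMSL.

```python
import random
from collections import Counter


class SharedPool:
    """Hypothetical pool of weighted pitch classes shared by all four cases."""

    def __init__(self, seed_pitches):
        self.weights = Counter(p % 12 for p in seed_pitches)

    def contribute(self, pitch, amount=1):
        # A case reinforces a pitch class, e.g. in response to its participant's gestures.
        self.weights[pitch % 12] += amount

    def draw(self, rng):
        # Any case draws a pitch class with probability proportional to its weight.
        pitches = list(self.weights)
        return rng.choices(pitches, weights=[self.weights[p] for p in pitches])[0]


pool = SharedPool([0, 4, 7])   # start from a C major triad
pool.contribute(22, amount=3)  # one case adds B-flat (22 mod 12 = 10) with extra weight
rng = random.Random(1)
print([pool.draw(rng) for _ in range(8)])
```

Every case reads from the same weights, so a change introduced by one participant is heard in the music of the other three. This is the kind of musical interdependence the paragraph describes.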

4 Exploration of Music

The suitcases in Sound Mapping, as well as providing a practical means of transporting the music modules, serve as a metaphor for travel and exploration. This is relevant to the way the composition is structured, and also to the function of Sullivan's Cove. The Cove was once the major port of Hobart; however, most heavy shipping has since left the district. The region now has a dual purpose as a port and leisure district, and is characterised by a major influx of tourists during the summer months (Solomon, 1976).

The music for this project is at present in the planning stage; however, a basic structure has been devised. Navigation of the musical composition will occur on two levels: a location-dependent global level, and a local level dependent on gesture and pseudo-location.

The global structure is determined by the DGPS receiver. As the group moves through the mapping zone, the lap-top computer will use information from the DGPS to retrieve local algorithms written specifically for the corresponding locations. One algorithm will be created for each of the four cases for every specified region within the zone. The borders of regions defined by the global structure will interconnect continuously, and transitions between algorithms will occur at the global level. The rate and nature of each transition will be decided according to the aesthetics of the adjoining regions. Sites contrasting sharply with their neighbours will use rapid transitions to signify the demarcation, while similar adjoining regions will call for slow, fluid transitions.
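One possible realisation of the global level is sketched in Python below, under stated assumptions. Each region is stored as a polygon on a local metre grid derived from the DGPS fixes. The region containing the hub is found with a ray-casting point-in-polygon test. The crossfade time is set from a contrast rating between neighbouring regions. The region names, coordinates, the linear contrast mapping and the 2 s and 30 s bounds are all hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]  # (east, north) in metres on an assumed local grid


def point_in_polygon(p: Point, poly: List[Point]) -> bool:
    """Ray-casting test: count edge crossings of a ray running east from p."""
    x, y = p
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's height (half-open rule)
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def find_region(p: Point, regions: Dict[str, List[Point]]) -> Optional[str]:
    """Name of the first region containing p, or None outside the mapping zone."""
    for name, poly in regions.items():
        if point_in_polygon(p, poly):
            return name
    return None


def transition_seconds(contrast: float, fastest: float = 2.0, slowest: float = 30.0) -> float:
    """Hypothetical linear mapping: contrast 0 (similar) -> slow, 1 (sharp) -> fast."""
    contrast = min(max(contrast, 0.0), 1.0)
    return slowest + (fastest - slowest) * contrast


regions = {
    "wharf": [(0, 0), (100, 0), (100, 50), (0, 50)],
    "square": [(100, 0), (180, 0), (180, 50), (100, 50)],
}
print(find_region((40.0, 20.0), regions), transition_seconds(1.0))
```

With this half-open crossing test, a point on the shared border in the example (east = 100 m) is assigned to "square" only, so the hub is never in two regions at once there.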

DGPS has limited data resolution for the production of music at the local level, so higher-resolution information is needed if the system is to respond to the subtle gestures of participants. Local algorithms produce music directly, receiving their prime input from the motion-sensing equipment along with transition data supplied by the global level. The motion sensors will quantify gesture and also provide additional location information to modulate musical and gestural parameters. Gestural input will include tilt, azimuth, and forward and backward velocity.

Automotive navigation systems combine GPS with dead reckoning, which estimates position from gyroscope and odometer readings, to overcome poor resolution and transmission failure (Andrew Corporation, 1996). Such a system could provide detailed location information for parameter mapping, as well as a gestural interface when supplemented by a tilt gyroscope. Dead reckoning will not be implemented, however. It is difficult to guarantee standard starting conditions for all four cases, and to maintain accuracy over prolonged periods, without greater hardware development costs.
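Dead reckoning integrates odometer distance along the gyroscope heading from a known starting fix. The Python sketch below shows the basic update. It also shows how a small, constant heading error grows into several metres of drift, which illustrates why standard starting conditions and long-term accuracy are a concern. The function name, the 1-degree bias and the 500 m walk are illustrative assumptions, not measurements from the project.

```python
import math


def dead_reckon(east, north, heading_rad, distance_m):
    """Advance a position by an odometer distance along a gyroscope heading.
    Heading is measured clockwise from north, so east uses sin and north uses cos."""
    return (east + distance_m * math.sin(heading_rad),
            north + distance_m * math.cos(heading_rad))


# Start from a known fix at the origin, walk 10 m north, then 10 m east.
pos = (0.0, 0.0)
for heading_deg, dist in [(0.0, 10.0), (90.0, 10.0)]:
    pos = dead_reckon(*pos, math.radians(heading_deg), dist)
print(pos)

# A hypothetical constant 1-degree gyro bias over a 500 m straight walk north:
true_end = dead_reckon(0.0, 0.0, 0.0, 500.0)
biased_end = dead_reckon(0.0, 0.0, math.radians(1.0), 500.0)
print(math.dist(true_end, biased_end))  # position error in metres after 500 m
```

The error grows with distance travelled. Without periodic correction from an absolute fix such as DGPS, the estimate drifts steadily.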

Instead, a pseudo-location system has been devised to track the movement of all four cases within each local region specified by the hub. While the hub remains within a given local region, the functional size of the region depends on how far the standard cases actually stray from the hub. How can musical parameters be mapped to local movement if the functional size of the region is unknown? As a solution, two-dimensional motion data from the odometer and the azimuth gyroscope will be mapped onto the surface of a spherical data structure whose surface area is smaller than that of the local region concerned. Because the data is mapped to the continuous surface of a sphere, issues relating to boundaries become irrelevant.
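The paper does not give this mapping in detail. The Python sketch below is one possible realisation, assumed here rather than documented. Odometer displacement is treated as distance along a great circle and the azimuth gyroscope reading as the heading, using the standard spherical destination-point formula. The radius is chosen so that the sphere's surface area (4πR²) is a fixed fraction of the region's area. The 0.5 fraction, the function names and the 100 m by 100 m region are hypothetical. The surface is closed, so a case can travel indefinitely without reaching an edge, and the resulting latitude and longitude can drive musical parameters.

```python
import math


def sphere_radius_for(region_area_m2, fraction=0.5):
    """Radius whose surface area 4*pi*R^2 equals a (hypothetical) fraction of the region."""
    return math.sqrt(fraction * region_area_m2 / (4 * math.pi))


def move_on_sphere(lat, lon, heading, distance_m, radius_m):
    """Travel distance_m along a great circle from (lat, lon), all angles in radians.
    Assumption: the gyroscope azimuth is used directly as the heading on the sphere."""
    delta = distance_m / radius_m  # angular distance travelled
    s = math.sin(lat) * math.cos(delta) + math.cos(lat) * math.sin(delta) * math.cos(heading)
    lat2 = math.asin(max(-1.0, min(1.0, s)))  # clamp guards against rounding past +/-1
    lon2 = lon + math.atan2(math.sin(heading) * math.sin(delta) * math.cos(lat),
                            math.cos(delta) - math.sin(lat) * math.sin(lat2))
    lon2 = (lon2 + math.pi) % (2 * math.pi) - math.pi  # wrap into [-pi, pi)
    return lat2, lon2


R = sphere_radius_for(10_000.0)  # hypothetical 100 m x 100 m local region
lat, lon = 0.0, 0.0
for heading_deg, metres in [(0.0, 20.0), (90.0, 35.0), (200.0, 80.0)]:
    lat, lon = move_on_sphere(lat, lon, math.radians(heading_deg), metres, R)
print(round(R, 2), math.degrees(lat), math.degrees(lon))
```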

5 Fact and Fiction

Sound Mapping is a mobile sound installation. This apparent oxymoron arises because the work, although physically independent of location, depends on location-specific information. Sound Mapping constitutes a form of what Mark Weiser of Xerox terms embodied virtuality, a state in which the body is both a physical entity and a pattern of information (Hayles, 1996). In Sound Mapping, participants occupy a space that is at once a virtual soundscape and a physical environment. Their movements, as interpreted by the technology, are transmuted into digital information that interacts with their immediate location and with the gestures of their fellow participants.

In this way a convergence is created between the familiar home (or holiday destination) of the participant and a sonic fantasy mapping known territory. It is hoped this interaction between fact and fiction will prove resonant for participants who will interpret their home, perhaps for the first time, through a vision of sound.

The music of Sound Mapping will be constructed from concrete and synthesised sounds under the continuous control of participants and the environment. It will reflect the locations where it is produced without necessarily representing those places. It does not function as a historical or cultural tour of a city per se, but operates in reverse, using the city as an energising surface. At times the music will strive to represent locations; however, it will also be produced to contrast with and challenge people's perceived notions of place, time and motion.

Where historical references are made in the music, they will relate to history widely known by the general public. Reference will only be made if historical usage and events have a significant bearing on contemporary function.

6 Music and Architecture

Participatory, exploratory works employing diffuse sound fields in architectural space have been created by sound artists such as Michael Brewster (1994) and Christina Kubisch in her "sound architectures" installations (1990). Recently, composers such as Gerhard Eckel have embarked on projects that use virtual architecture as a means of guiding participants through compositions defined by the vocabulary of the virtual space (1996).

The interaction between music and architecture represents a fascinating area for new research. In contrast to architecture, music traditionally structures events in time rather than space, forming a linear narrative. Architecture spatially arranges and compartmentalises the functional components of society into integrated, efficient structures. In doing so it employs visual structures that signify multiple meanings, including functional purpose and social ideals.

Sound Mapping investigates a translation of both the organisational and the symbolic qualities of architecture. Its relationship to architecture will be commensal rather than parasitic. It will draw on the work of Young et al. (1993), which details terms of reference common to both disciplines in the areas of inspiration, influence and style. Music and architecture will thus share a complementary language of construction, elucidating functions common to both.

Signification of discrete elements of architecture is integral to the organisation of urban space. Just as people have an intuitive understanding of urban design, the environment will aid participants' navigation and understanding of the associated musical landscape. Urban structures will suggest, among other things, musical linkages, demarcations, continuations and points of interest.

7 Hardware Implementation

Distributed processing

The hardware for Sound Mapping comprises four separate but interdependent units and requires a distributed processing approach to design. Within the hub case, the lap-top computer controls the four synthesiser voices. It must process data from the DGPS system and the hub case gyroscope and odometer. It must also process three sets of gyroscope and odometer readings sent via RF (radio frequency) links from the standard cases. Considerable preprocessing is necessary to merge these data into a consistent format suitable for the lap-top's serial input port. In addition, the gyroscope and odometer readings within each standard case must be preprocessed and merged into a consistent format suitable for the RF link.

The PIC 16C84/04 microcontroller, manufactured by Microchip, has been chosen for all of these preprocessing tasks in the four cases. Unlike some other microcontrollers, it has no on-chip dedicated serial hardware or analog conversion capability. However, it has the overwhelming advantage of an easily reprogrammable EEPROM program memory. Because it can be reprogrammed without the ultraviolet erasure required by conventional EPROM, it allows an efficient software development cycle for this project. The alternative, a software simulator, is expected to be much less efficient given the distributed, real-time problems to be encountered.

Within each case, the gyroscope, which is a piezoelectric rather than a rotational type, provides an analog voltage output. This is converted to digital form by an external ADC chip read by the PIC. The odometer comprises two Hall-effect sensors that respond to magnets implanted in the case wheel. The sensor outputs are amplified and read directly by the PIC as essentially digital signals, then processed to extract direction as well as displacement. The PIC's final task is to send all this data as a serial data block. No extra hardware, such as a UART, is required for this, as the PIC is fast enough to emulate the process in software.
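The paper says only that the two Hall-effect signals are processed to extract direction and displacement. A common way to do this, assumed here, is to position the sensors so that their outputs are a quarter-cycle apart (quadrature). The order in which the two outputs change then gives the direction of rotation. The sketch is written in Python for readability rather than as PIC code. The state sequence, wheel diameter and steps per revolution are hypothetical.

```python
import math

# Assumed Gray-code order of the two sensor outputs (A, B) for forward rotation.
FORWARD_SEQUENCE = [(0, 0), (0, 1), (1, 1), (1, 0)]


def decode_quadrature(samples):
    """Net step count from successive (A, B) readings: +1 per forward
    state change, -1 per backward change; repeated readings are ignored."""
    steps = 0
    prev = samples[0]
    for state in samples[1:]:
        if state == prev:
            continue
        i = FORWARD_SEQUENCE.index(prev)
        if state == FORWARD_SEQUENCE[(i + 1) % 4]:
            steps += 1
        elif state == FORWARD_SEQUENCE[(i - 1) % 4]:
            steps -= 1
        else:
            # Both outputs changed at once: a state was missed, so direction is unknown.
            raise ValueError("sampling too slow to resolve direction")
        prev = state
    return steps


def steps_to_metres(steps, wheel_diameter_m=0.06, steps_per_rev=8):
    """Convert steps to distance; wheel size and steps per turn are hypothetical."""
    return steps * math.pi * wheel_diameter_m / steps_per_rev


hall_readings = [(0, 0), (0, 1), (1, 1), (1, 0), (0, 0), (1, 0)]  # four steps forward, one back
net = decode_quadrature(hall_readings)
print(net, steps_to_metres(net))
```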

Within the hub case, a fifth PIC is dedicated to merging the DGPS, local gyroscope and odometer data with the radio data from the standard cases; the result also forms a serial data block, which is sent to the lap-top.
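The paper does not give the layout of the merged data block. The block reaches the lap-top over MIDI, so one plausible framing, assumed here, is a MIDI System Exclusive message. Such a message begins with the status byte 0xF0 and ends with 0xF7, and every byte in between must have its top bit clear. Each sensor value is therefore split across two 7-bit data bytes. The Python sketch below shows that framing. The 14-bit resolution, the field order and the function names are hypothetical, and DGPS fields are omitted for brevity.

```python
# 0x7D is the MIDI manufacturer ID reserved for non-commercial and educational use.
NON_COMMERCIAL_ID = 0x7D


def to_7bit(value):
    """Split a 14-bit unsigned value into two MIDI data bytes (high 7 bits first)."""
    if not 0 <= value < 1 << 14:
        raise ValueError("value out of 14-bit range")
    return [(value >> 7) & 0x7F, value & 0x7F]


def build_block(values):
    """Frame 14-bit sensor values as one System Exclusive message (0xF0 ... 0xF7)."""
    body = [b for v in values for b in to_7bit(v)]
    return bytes([0xF0, NON_COMMERCIAL_ID] + body + [0xF7])


def parse_block(block):
    """Inverse of build_block, as the lap-top side might decode it."""
    if block[0] != 0xF0 or block[1] != NON_COMMERCIAL_ID or block[-1] != 0xF7:
        raise ValueError("not a block in this hypothetical format")
    data = block[2:-1]
    return [(data[i] << 7) | data[i + 1] for i in range(0, len(data), 2)]


# Hypothetical readings: odometer count, tilt and azimuth for each of the four cases.
values = [512, 8191, 300] * 4
block = build_block(values)
print(len(block))  # 27 bytes: 24 data bytes plus 3 framing bytes
assert parse_block(block) == values
```

The cost of 7-bit data bytes is overhead: two bytes for every 14-bit value, which counts against the bandwidth of the MIDI link.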

Serial data rates

Standard MIDI (31.25 kbit/s) is used for the final, merged data connection to the lap-top. This allows a maximum transfer rate of roughly 3 kbyte/s, although the average rate is somewhat lower. Initially, for consistency, MIDI had also been planned for the intermediate serial data block transfers within each standard case, but this would have required greater bandwidth from the RF links. The Australian SMA (Spectrum Management Agency) rightly discourages this. It applies especially to low-power applications such as this one, where predetermined short-range frequency allocations can be used and a specific licence application is not necessary.
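The figure of roughly 3 kilobytes per second quoted above follows from asynchronous serial framing. MIDI sends each byte as a 10-bit frame (one start bit, eight data bits, one stop bit), so 31,250 bit/s carries at most 3,125 bytes per second. Assuming the same 10-bit framing on the radio links, 9.6 kbit/s gives at most 960 bytes per second. The Python sketch below shows the bit sequence a software UART on the PIC would emit and repeats the arithmetic. The function names are illustrative.

```python
def uart_frame(byte):
    """Bits of one 8-N-1 asynchronous frame in transmission order: a start bit (0),
    eight data bits least-significant first, then a stop bit (1). A PIC without a
    UART can produce this by toggling an output pin once per bit period."""
    return [0] + [(byte >> i) & 1 for i in range(8)] + [1]


def max_bytes_per_second(bit_rate, bits_per_frame=10):
    """Upper bound on throughput when every byte costs a full 10-bit frame."""
    return bit_rate / bits_per_frame


midi_rate = max_bytes_per_second(31_250)  # 3125.0 bytes/s over standard MIDI
radio_rate = max_bytes_per_second(9_600)  # 960.0 bytes/s over the 9.6 kbit/s links
bit_period_us = 1e6 / 9_600               # about 104 microseconds per bit at 9.6 kbit/s
print(uart_frame(0x41), midi_rate, radio_rate, round(bit_period_us, 1))
```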

The DGPS system sends its data in bursts at 9.6 kbit/s, so this rate is now also used within the standard cases. This allows readily available modules to be used for the transmitters and receivers in the RF links.

References

Andrew Corporation 1996. Autogyro Navigator. World Wide Web document: http://www.andrew.com/products/gyroscope/csgn0003.htm

Bell, S.C.D. 1991. Participatory Art and Computers. PhD dissertation, Loughborough University of Technology.

Benjamin, W. 1979. "The Work of Art in the Age of Mechanical Reproduction". In G. Mast & M. Cohen (Eds.): Film Theory & Criticism, Oxford University Press, New York, pp.848-870.

Boyer, M.C. 1996. CyberCities. Princeton Architectural Press, New York, pp.111-113.

Brewster, M. 1994. "Geneva By-Pass". In E. Martin (Ed.): Architecture as a Translation of Music, Princeton Architectural Press, New York, pp.36-40.

Concannon, K. 1990. "Cut and Paste: Collage and the Art of Sound". In D. Lander & M. Lexier (Eds.): Sound by Artists, Art Metropole, Banff, pp.161-182.

Eckel, G. 1996. "Camera Musica: Virtual Architecture as Medium for the Exploration of Music". In Proceedings of the 1996 International Computer Music Conference, pp.358-360.

Hayles, N.K. 1996. "Embodied Virtuality: Or How To Put Bodies Back into the Picture". In M. Moser & D. MacLeod (Eds.): Immersed in Technology, MIT Press, Cambridge, pp.1-28.

Kubisch, C. 1990. "About my Installations". In D. Lander & M. Lexier (Eds.): Sound by Artists, Art Metropole, Banff, pp.69-72.

Mott, I. 1995. "The Talking Chair: Notes on a Sound Sculpture". In Leonardo 28(1): pp.69-70.

Mott, I. & Sosnin, J. 1996. "A New Method for Interactive Sound Spatialisation". In Proceedings of the 1996 International Computer Music Conference, pp.169-172.

Murphie, A. 1990. "Negotiating Presence: Performance and New Technologies". In P. Hayward (Ed.): Culture, Technology and Creativity, John Libbey and Company, London, pp.209-206.

Polansky, L. 1994. "Live Interactive Computer Music in HMSL, 1984-1992". In Computer Music Journal, 18(2): p.59.

Solomon, R.J. 1976. Urbanisation: The Evolution of an Australian Capital. Angus and Robertson Publishers.

Trimble Navigation Company 1997. Trimble GPS Tutorial. World Wide Web document: http://www.trimble.com/gps/fsections/aa_f1.htm

Westerkamp, H. 1990. "Listening and Soundmaking: A Study of Music-as-Environment". In D. Lander & M. Lexier (Eds.): Sound by Artists, Art Metropole, Banff, pp.227-234.

Young, G. et al. 1993. "Musi-tecture: Seeking Useful Correlations Between Music and Architecture". In Leonardo Music Journal , Vol. 3, pp.39-43.

Sound Mapping

Sound Mapping is a participatory work of sound art made for outdoor environments. The work is installed in the environment by means of a Global Positioning System (GPS), which tracks the movement of individuals through the space. Participants wheel four movement-sensitive, sound-producing suitcases to realise a composition that spans space as well as time. The suitcases play music in response to nearby architectural features and the movements of individuals. Sound Mapping aims to assert a sense of place, physicality and engagement to reaffirm the relationship between art and the everyday.

Sound Mapping is a collaborative project by Iain Mott, Marc Raszewski and Jim Sosnin. Its premiere exhibition was staged in Sullivan's Cove, Hobart, by the Tasmanian Museum and Art Gallery (TMAG) from 29 January to 15 February 1998. Sound Mapping was awarded an Honorary Mention in the Interactive Art category of the 1998 Prix Ars Electronica, and was exhibited as part of the Ars Electronica festival in Linz, Austria, in September 1998. It was also presented at the 2004 International Conference on Auditory Display (ICAD) at the Sydney Opera House.

This project was assisted by the New Media Arts Fund of the Australia Council, the Federal Government's arts funding and advisory body, with additional generous support from Arts Tasmania, Vere Brown leather goods and luggage, Fader Marine, Salamanca Arts Centre, Hobart City Council and the Hobart Summer Festival.
